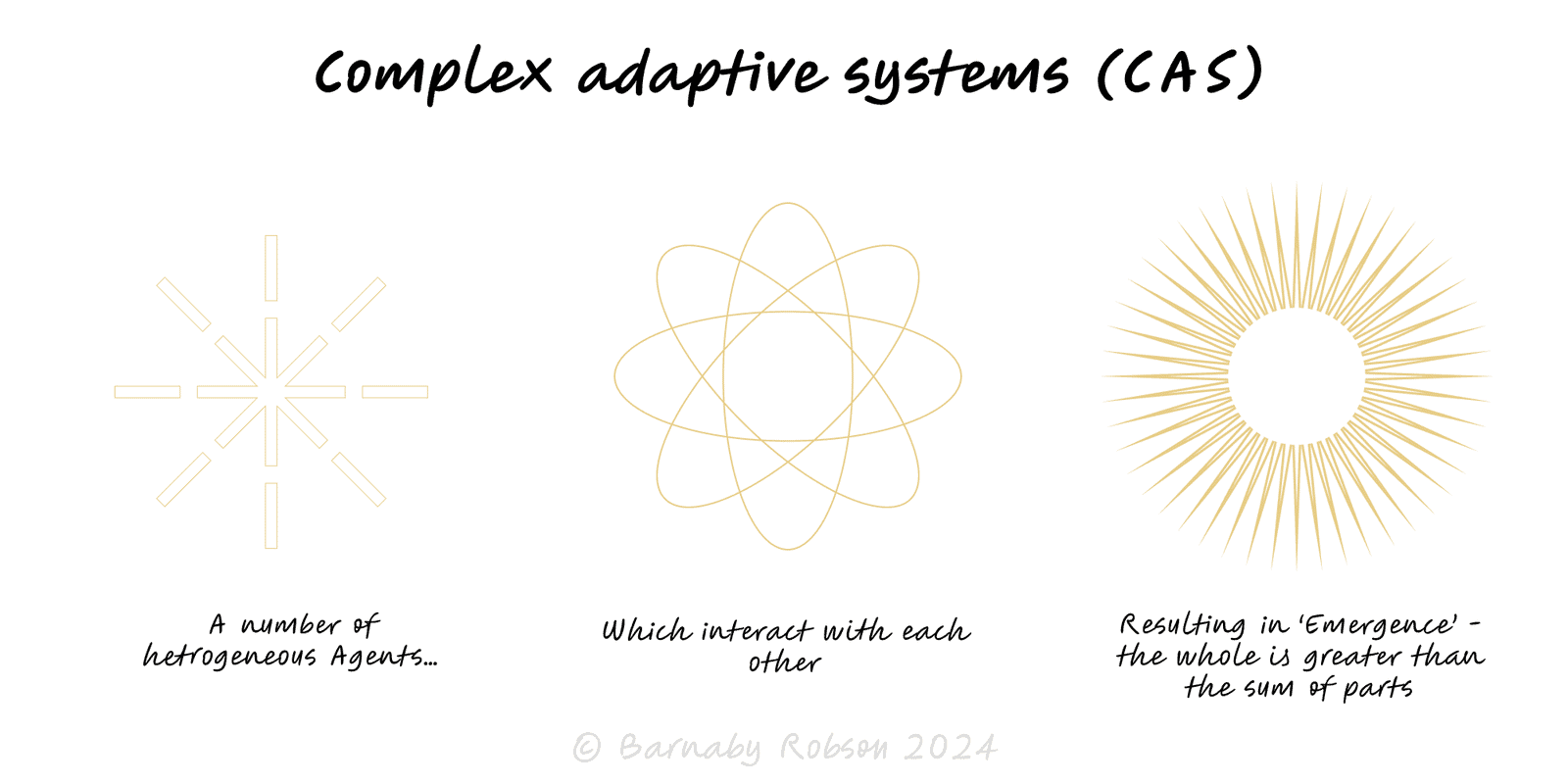

Complex Adaptive Systems

Complexity science (John Holland, Murray Gell-Mann, Stuart Kauffman, Melanie Mitchell; Santa Fe Institute)

A complex adaptive system (CAS) is made of many agents (people, teams, firms, microbes, services) that learn and adapt as they interact. Because interactions are non-linear and full of feedback, the whole system shows patterns you can’t predict by inspecting parts alone (emergence). The practical move is to design conditions—rules, incentives, network links, guardrails—so desirable patterns are more likely to appear and persist.
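Emergence can be made concrete with a toy model. The sketch below assumes Granovetter's threshold model of collective behaviour, a classic illustration that the text itself does not prescribe: each agent joins once the number of people already participating reaches its personal threshold. The function `cascade_size` and the two 100-agent crowds are hypothetical.

```python
def cascade_size(thresholds: list[int]) -> int:
    """Run Granovetter's threshold rule to a fixed point.

    An agent participates once the number of agents already participating
    is at least its threshold. Returns the final number of participants.
    """
    active = 0
    while True:
        # Everyone whose threshold is met by the current crowd size joins.
        joined = sum(1 for t in thresholds if t <= active)
        if joined == active:  # fixed point: nobody new is tipped over
            return active
        active = joined


# Hypothetical crowds of 100 agents.
crowd_a = list(range(100))              # thresholds 0, 1, 2, ..., 99
crowd_b = [0, 2] + list(range(2, 100))  # identical except one 1 became a 2

print(cascade_size(crowd_a), cascade_size(crowd_b))  # → 100 1
```

The two crowds differ in a single agent, yet one tips completely and the other stalls after the first joiner. Inspecting the thresholds one at a time gives no hint of that gap.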

Key concepts

Agents & local rules – each actor follows simple rules and incentives; global patterns emerge from many local decisions.

Interaction topology – who connects to whom matters (hubs, clusters, weak ties); topology shapes diffusion and failure.

Feedback loops – reinforcing (growth) and balancing (stability) loops, often with delays.

Non-linearity & thresholds – small nudges can trigger big shifts once a tipping point is crossed.

Adaptation & co-evolution – agents change in response to others; today’s solution alters tomorrow’s game.

Path dependence – early accidents and lock-in steer future options; history matters.

Diversity & requisite variety – heterogeneous agents handle a wider range of shocks; monocultures fail together.

Robust-yet-fragile – systems can be resilient to common shocks yet brittle to rare ones; design for both.
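Delays in feedback loops are where intuition fails most often. A minimal sketch, assuming a single balancing loop: each step, a controller closes a fixed fraction (`gain`) of the gap between a target and the stock, but it sees the stock as it was `delay` steps earlier. The function, parameter names and numbers are illustrative, not taken from the text.

```python
def balancing_loop(target: float, gain: float, delay: int, steps: int) -> list[float]:
    """Simulate one balancing feedback loop with an information delay.

    Each step the stock moves by `gain` times the gap between `target`
    and the stock observed `delay` steps ago (the initial value stands in
    for observations from before the simulation started).
    """
    stock = [0.0]
    for t in range(steps):
        observed = stock[max(0, t - delay)]  # stale reading of the stock
        stock.append(stock[-1] + gain * (target - observed))
    return stock


smooth = balancing_loop(target=100.0, gain=0.5, delay=0, steps=30)
lagged = balancing_loop(target=100.0, gain=0.5, delay=2, steps=30)

print(f"no delay: peak {max(smooth):.1f}")  # approaches 100 from below
print(f"2-step delay: peak {max(lagged):.1f}, later trough {min(lagged[4:]):.1f}")
```

With the same gain, a two-step lag turns smooth convergence into overshoot and oscillation: the loop keeps correcting for a gap that has already closed.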

Where it applies

Product ecosystems & marketplaces – seed both sides, tune incentives, prevent spam/abuse cascades.

Organisations – teams as agents; culture and incentives as rules; collaboration networks as topology.

Supply chains & operations – buffers, dual sourcing, decoupling to prevent cascades.

Epidemics & virality – model spread and tipping thresholds; use them to target interventions.

Financial & risk systems – leverage, liquidity and network exposure create non-linear crises.

Policy & urban planning – small local rules (zoning, pricing) produce city-scale patterns.
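For the epidemics and virality case, the threshold idea can be sketched with a textbook discrete-time SIR model (susceptible, infected, recovered). This is an assumption: the text does not specify a model, and the recovery rate `gamma`, the `seed` share and the one-day time step are illustrative. The basic reproduction number `r0` sets the infection rate as `beta = r0 * gamma`; a targeted intervention is modelled crudely as a hypothetical `vaccinated` share removed from the susceptible pool.

```python
def attack_rate(r0: float, vaccinated: float = 0.0, gamma: float = 0.1,
                seed: float = 0.001, days: int = 3000) -> float:
    """Share of the population ever infected in a discrete-time SIR model.

    All quantities are population shares; one loop iteration is one day.
    """
    beta = r0 * gamma                 # infection rate implied by r0
    s = 1.0 - vaccinated - seed       # susceptible
    i = seed                          # currently infected
    ever_infected = seed
    for _ in range(days):
        new_infections = beta * s * i
        s -= new_infections
        i += new_infections - gamma * i   # gamma * i recover each day
        ever_infected += new_infections
    return ever_infected


for r0 in (0.8, 0.95, 1.05, 1.2, 1.5, 2.0):
    print(f"r0={r0:<4} attack rate {attack_rate(r0):.3f}")
print(f"r0=2.0 with 60% vaccinated: {attack_rate(2.0, vaccinated=0.6):.3f}")
```

The attack rate stays small while the effective reproduction number (`r0` times the susceptible share) is below 1 and climbs steeply once it passes 1. In the last line, vaccinating 60% pulls the effective number for `r0=2.0` down to about 0.8, back under the threshold.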

How to apply it

Define boundary & purpose – what’s in scope, and what “good” looks like (fitness function).

Map agents, incentives, and links – who acts, why they act, and how they connect (hubs, clusters, bridges).

Surface feedback & delays – list reinforcing/balancing loops; note lags that cause oscillations.

Design simple rules – defaults, constraints and incentives that make good behaviours easy and bad ones costly.

Run safe-to-fail probes – small, parallel experiments; keep what works, kill what doesn’t.

Rewire topology where needed – add/remove links (e.g., routing rules, moderation, team interfaces) to change diffusion and risk.

Add modularity & buffers – isolate components, add slack at bottlenecks, use circuit-breakers for surges.

Monitor early warnings – rising variance and autocorrelation, lengthening queues, climbing near-miss rates; watch tail risks, not just averages.

Iterate on a cadence – short observe–decide–act loops; expect the system to change in response to your changes.
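The early-warning signals in the monitoring step can be computed over a rolling window. The sketch below assumes a hypothetical metric that behaves like an AR(1) process whose recovery slows over time (the coefficient `phi` drifts towards 1, a common toy model of a system approaching a tipping point). The window size, seed and helper names are arbitrary choices for illustration.

```python
import random


def variance(xs: list[float]) -> float:
    """Population variance of a window."""
    m = sum(xs) / len(xs)
    return sum((x - m) ** 2 for x in xs) / len(xs)


def lag1_autocorr(xs: list[float]) -> float:
    """Lag-1 autocorrelation: how strongly each value echoes the previous one."""
    m = sum(xs) / len(xs)
    num = sum((a - m) * (b - m) for a, b in zip(xs, xs[1:]))
    den = sum((x - m) ** 2 for x in xs)
    return num / den if den else 0.0


def rolling_indicators(series: list[float], window: int) -> list[tuple[float, float]]:
    """(variance, lag-1 autocorrelation) for every full window, oldest first."""
    return [
        (variance(series[end - window:end]), lag1_autocorr(series[end - window:end]))
        for end in range(window, len(series) + 1)
    ]


# Hypothetical metric: noise that dies away more slowly as phi rises from 0.1 to 0.9.
rng = random.Random(42)
n = 1000
x = 0.0
series = []
for t in range(n):
    phi = 0.1 + 0.8 * t / (n - 1)
    x = phi * x + rng.gauss(0.0, 1.0)
    series.append(x)

indicators = rolling_indicators(series, window=250)
(first_var, first_ac), (last_var, last_ac) = indicators[0], indicators[-1]
print(f"variance {first_var:.2f} -> {last_var:.2f}")
print(f"lag-1 autocorrelation {first_ac:.2f} -> {last_ac:.2f}")
```

On a real metric (queue length, error rate) the same functions would run continuously; a sustained upward trend in both indicators together is the signal, while a single noisy window proves little.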

Common pitfalls

Command-and-control bias – micro-managing agents instead of shaping rules and incentives.

Linear planning – straight-line forecasts in a non-linear regime.

Optimising a part – local KPIs that damage whole-system performance (suboptimisation).

Monoculture – uniform processes/tech that increase correlated failure.

Over-fitting one model – relying on a single model as if it were the system; treat models as lenses, use several and compare.

Ignoring time scales – fast and slow loops collide (daily targets vs quarterly replenishment) and create whiplash.
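The monoculture pitfall is clearest when average loss is separated from tail risk. A minimal sketch, assuming each technology stack fails independently with the same probability per period and that every node on a failed stack goes down: spreading six hypothetical nodes across three stacks leaves the expected share of nodes down unchanged but shrinks the chance of a total outage from p to p cubed. The function name `outage_risk` and the 5% failure rate are illustrative.

```python
from itertools import product


def outage_risk(stack_of_node: list[str], p_fail: float) -> tuple[float, float]:
    """Exact outage statistics when each distinct stack fails independently.

    Returns (expected share of nodes down, probability that every node is down).
    """
    stacks = sorted(set(stack_of_node))
    expected_down = 0.0
    p_total_outage = 0.0
    # Enumerate every combination of stack failures (2 ** len(stacks) cases).
    for outcome in product((False, True), repeat=len(stacks)):
        prob = 1.0
        for failed in outcome:
            prob *= p_fail if failed else 1.0 - p_fail
        failed_stacks = {s for s, failed in zip(stacks, outcome) if failed}
        share_down = sum(s in failed_stacks for s in stack_of_node) / len(stack_of_node)
        expected_down += prob * share_down
        if share_down == 1.0:
            p_total_outage += prob
    return expected_down, p_total_outage


monoculture = ["A"] * 6          # every node on the same stack
diverse = ["A", "B", "C"] * 2    # two nodes on each of three stacks

for name, fleet in (("monoculture", monoculture), ("diverse", diverse)):
    mean_down, p_all = outage_risk(fleet, p_fail=0.05)
    print(f"{name:<12} expected share down {mean_down:.3f}, P(total outage) {p_all:.6f}")
```

Both fleets lose the same share of nodes on average, which is why a dashboard of averages cannot tell them apart; only the correlated-failure probability reveals the difference.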