Thought Experiment

Philosophy & science method (Galileo; Hume; Einstein; later ethicists and cognitive scientists)

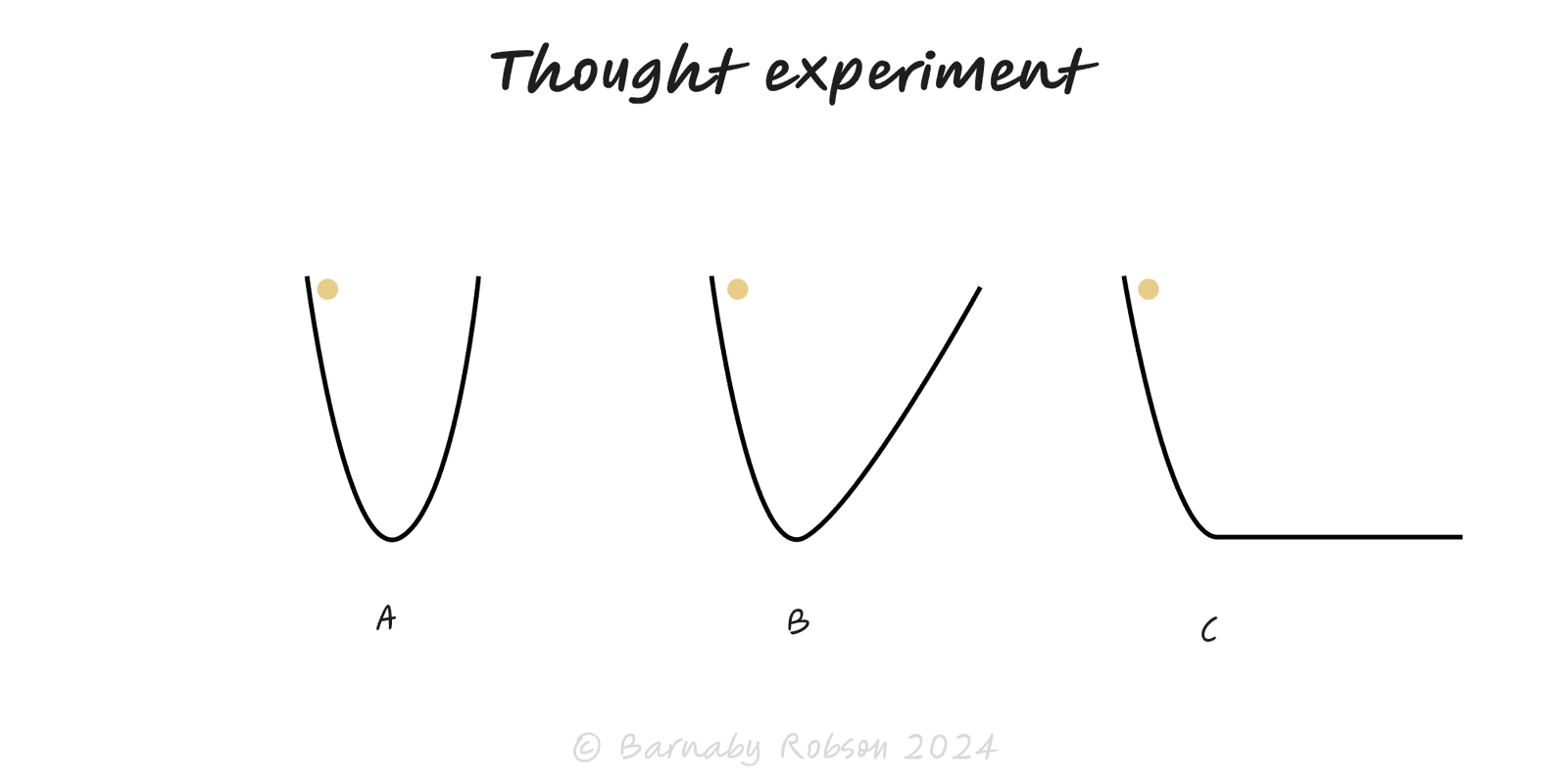

A thought experiment is a structured “what if?” used to learn from reasoned imagination. By idealising away noise and holding key variables fixed, you stress-test an idea’s logic, ethics, or dynamics. Classics include Galileo’s falling bodies, Einstein’s chase after a light beam, Schrödinger’s cat, the trolley problem, Mary’s room, and the Chinese room. Treat them as tools for clarity, then seek evidence in the real world.

Set the aim – what claim, design, or policy are you testing?

Idealise & isolate – simplify to the essentials; hold non-essentials constant.

Pose the counterfactual – change one variable (“Suppose…”) and derive implications.

Trace consequences – follow incentives, constraints, and second-order effects.

Use techniques –

- Reductio: push the claim to an extreme to see if it contradicts itself.

- Edge cases: test corners where the theory might break.

- Analogy contrasts: compare two close scenarios to locate the crux.
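As a worked illustration of reductio, Galileo’s falling-bodies argument can be reconstructed roughly as follows. The notation is an assumption made for this sketch and does not come from the original argument: v(m) is the natural falling speed of a body of mass m, and H and L are a heavy and a light body.

```latex
\begin{align*}
&\text{Assume (claim under test): } m_1 > m_2 \implies v(m_1) > v(m_2). \\
&\text{Tie a heavy body } H \text{ to a light body } L, \text{ with } m_H > m_L. \\
&\text{(a) The slower } L \text{ drags on } H: \quad v(m_L) < v_{H+L} < v(m_H). \\
&\text{(b) The pair is heavier than } H \text{ alone}: \quad v_{H+L} = v(m_H + m_L) > v(m_H). \\
&\text{(a) and (b) contradict each other, so the assumption fails.}
\end{align*}
```

The contradiction shows only that, in this idealised setting, falling speed cannot strictly increase with mass. Whether real bodies behave this way still has to be checked by experiment.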

Reconnect to reality – turn insights into hypotheses, decision rules, or tests.

Strategy – simulate competitor moves, regulatory shifts, or tech jumps.

Product & UX – run “zero-click”, “offline-only”, or “10× traffic” scenarios to surface constraints.

Risk & ethics – incident drills, trolley-like dilemmas, privacy trade-offs.

Science & analytics – tease out predictions before running costly studies.

Operations – “bus factor 1”, “supplier A fails”, “7-day backlog” scenarios.

Write the claim you want to test (one sentence).

List assumptions (facts, constraints, goals). Mark which are uncertain.

Design the scenario – pick one decisive change (e.g., price = £0; network offline; perfect competitor).

Run the logic – step through actors, incentives, flows, and time. Write what must follow if the claim is true.

Probe edges – best, base, and worst cases; one-way doors; long-tail outcomes.

Extract decisions – what would you do differently if this reasoning holds? What evidence would change your mind?

Operationalise – convert into a field test, metric threshold, or playbook update.
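The steps above can be sketched in a few lines of code. This is a minimal illustration built on the “10× traffic” scenario, not a prescribed method. The `Scenario` fields, the helper functions, and every number are hypothetical and chosen only to make the logic concrete.

```python
import math
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Scenario:
    """Assumptions behind the claim; all figures are hypothetical."""
    requests_per_second: float  # demand on the system
    capacity_per_server: float  # requests/second one server can absorb
    servers: int                # servers currently provisioned


def claim_holds(s: Scenario) -> bool:
    """Claim under test: provisioned capacity covers demand."""
    return s.servers * s.capacity_per_server >= s.requests_per_second


def servers_needed(s: Scenario) -> int:
    """Smallest server count for which the claim would hold."""
    return math.ceil(s.requests_per_second / s.capacity_per_server)


# Baseline assumptions (hypothetical numbers).
baseline = Scenario(requests_per_second=200, capacity_per_server=50, servers=6)

# Counterfactual: change exactly one variable and hold the rest fixed.
ten_x = replace(baseline, requests_per_second=baseline.requests_per_second * 10)

print(claim_holds(baseline))   # True: 6 * 50 = 300 >= 200
print(claim_holds(ten_x))      # False: 300 < 2000
print(servers_needed(ten_x))   # 40, a candidate threshold to test in the field
```

The output is a hypothesis, not evidence. The last figure is the kind of metric threshold a load test or playbook update could then confirm or refute.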

Unrealistic premises – simplifications that remove the crux rather than reveal it.

Intuition pumps – persuasive stories that feel right but skip the hard step.

Cherry-picked edge cases – designing a scenario to “win the argument”.

No bridge to evidence – elegant reasoning never checked against data.

Category errors – importing rules from physics to social systems (or vice versa) without justification.